And thus Nvidia keeps the money faucet running. Sorry AI companies, we’ve just created the latest and greatest. We know you have invested trillions already, but you need the hot new thing or the competition gets it and you are obsolete. Time to invest some more!

All three components will be in production this time next year

OK we will see then how good they are.

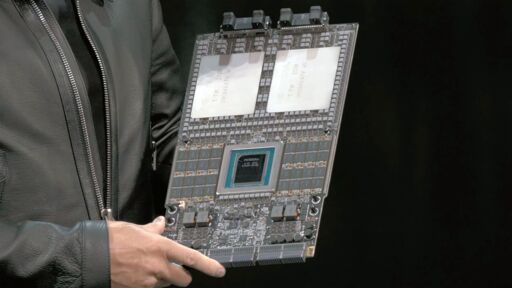

Pretty awful pictures, hard to see if the monster in the center of the board is a single chip or chiplet design? But it looks like a single chip, so guaranteed to be insanely expensive to make.Let’s hope AMD or others can really break into the compute market with some more competitive products.

It’s definitely chiplet, as the article points out. You can see the seams.

14 GB of vRAM?

288gb of vram/gpu

For anyone - including, apparently, this article’s author’s - who is confused about the form factor, this is the next generation to follow the Grace Blackwell “GB300-NVL72”, their full rack interconnected system.

It’s the same technology as the matching 8-GPU “HGX” board that is built into larger individual servers - which in this generation’s case is just called “B300” as it has the “Blackwell” GPU but not the “Grace” CPU - but not sold in smaller units than an entire rack.

Here are some pictures and a video of that NVL72 version (you can buy these from Dell and others, as well as direct from Nvidia):

https://www.servethehome.com/dell-and-coreweave-show-off-first-nvidia-gb300-nvl72-rack/ https://www.ingrasys.com/solutions/NVIDIA/nvidia_gb300_nvl72/

The full rack has 18 compute trays, each with 2 of the pictured board inside (for a total of 36 CPUs and 72 GPUs), and 9 NVLink switch trays that connect every GPU together. PSUs and networking make up the rest.

Kinda odd. 8 GPUs to a CPU is pretty much standard, and less ‘wasteful,’ as the CPU ideally shouldn’t do much for ML workloads.

Even wasted CPU aside, you generally want 8 GPUs to a pod for inference, so you can batch a model as much a possible without physically going ‘outside’ the server. It makes me wonder if they just can’t put as much PCIe/NVLink on it as AMD can?

LPCAMM is sick though. So is the sheer compactness of this thing; I bet HPC folks will love it.

Yeah, 88/2 is weird as shit. Perhaps the GPUs are especially large? I know NVIDIA has that thing where you can slice up a GPU into smaller units (I can’t remember what it’s called, it’s some fuckass TLA), so maybe they’re counting on people doing that.

They could be ‘doubled up’ under the heatspreader, yeah, so kinda 4x GPUs to a CPU.

And yeah… perhaps they’re maintaining CPU ‘parity’ with 2P EPYC for slicing it up into instances.

Finally, I get good frame rate in Monster Hunter: Wilds

Sorry, no OpenGL support.

What’s the connection with Vera Rubin? Is this used to process data from the Vera Rubin observatory?

They’ve been using scientists names for their stuff for a long time now. Pascal, Turing, etc.