New research from the Oxford Internet Institute at the University of Oxford, and the University of Kentucky, finds that ChatGPT systematically favours wealthier, Western regions in response to questions ranging from 'Where are people more beautiful?' to 'Which country is safer?' - mirroring long-standing biases in the data they ingest.

I wonder what difference it makes when the user isn’t using English. They don’t mention that they aren’t considering this and don’t mention it on their How it Works page, but they do in the paper’s abstract: “Finally, our focus on English-language prompts overlooks the additional biases that may emerge in other languages.”

They do also reference a study by another team that does show differences in bias based on input language which concludes, “Our experiments on several LLMs show that incorporating perspectives from diverse languages can in fact improve robustness; retrieving multilingual documents best improves response consistency and decreases geopolitical bias”

The subject of how and what type of bias is captured by LLMs is a pretty interesting subject that’s definitely worthy of analysis. Personally I do feel they should more prominently highlight that they’re just looking at English language interactions; it feels a bit sensationalist/click-baity at the moment and I don’t think they can reasonably imply that LLMs are inherently biased towards “male, white, and Western” values just yet.

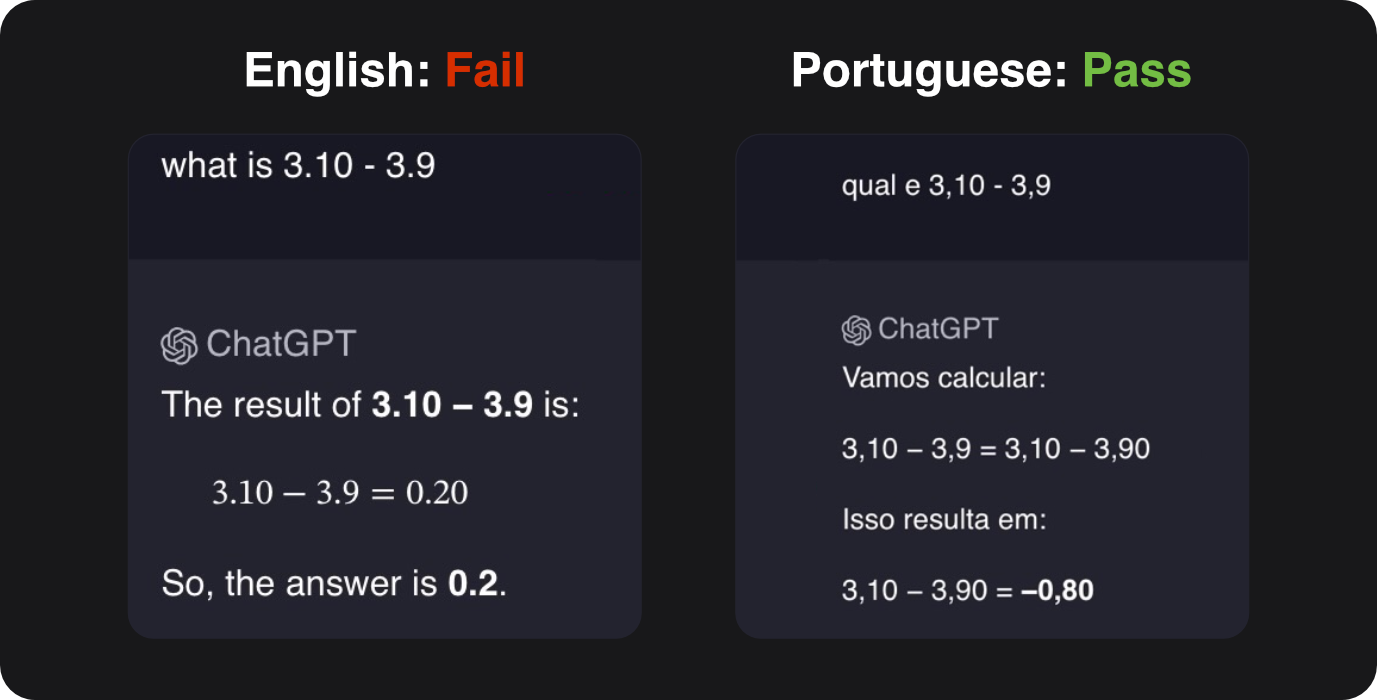

Kagi had a good little example of language biases in LLMs.

When asked what 3.10 - 3.9 is in english, it fails, but it succeeds in Portuguese, if you format the numbers as you would in Portuguese, with commas instead of periods.

This is because… 3.10 and 3.9 often appear in the context of python version numbers, and the model gets confused, assuming there is a 0.2 difference going from version 3.9 to 3.10 instead of properly doing math.

I wonder what difference it makes when the user isn’t using English. They don’t mention that they aren’t considering this and don’t mention it on their How it Works page, but they do in the paper’s abstract: “Finally, our focus on English-language prompts overlooks the additional biases that may emerge in other languages.”

They do also reference a study by another team that does show differences in bias based on input language which concludes, “Our experiments on several LLMs show that incorporating perspectives from diverse languages can in fact improve robustness; retrieving multilingual documents best improves response consistency and decreases geopolitical bias”

The subject of how and what type of bias is captured by LLMs is a pretty interesting subject that’s definitely worthy of analysis. Personally I do feel they should more prominently highlight that they’re just looking at English language interactions; it feels a bit sensationalist/click-baity at the moment and I don’t think they can reasonably imply that LLMs are inherently biased towards “male, white, and Western” values just yet.

Kagi had a good little example of language biases in LLMs.

When asked what 3.10 - 3.9 is in english, it fails, but it succeeds in Portuguese, if you format the numbers as you would in Portuguese, with commas instead of periods.

This is because… 3.10 and 3.9 often appear in the context of python version numbers, and the model gets confused, assuming there is a 0.2 difference going from version 3.9 to 3.10 instead of properly doing math.

deleted by creator