- 5 Posts

- 83 Comments

1·4 months ago

Apparently the Nintendo Switch 2 is already using the standard, so it might go over better than Sony.

1·7 months ago

Global interpreter lock

2·2 years ago

Yup, I’ve been plagued by this bug for a long time. I’m very excited to use this!

2·2 years ago

deleted by creator

1·2 years ago

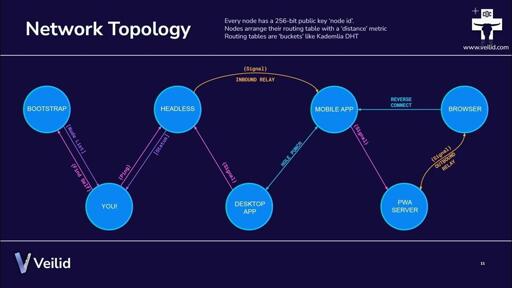

Centralization is likely the unintended end result of the internet. Consider a mesh network where all the links have even throughput. Now suddenly one node has some content that goes viral. Everyone wants to access that data. Suddenly that node needs to support a link that’s much wider because everyone’s requests accumulate there.

Someone goes and upgrades that link. Well, now they can serve many more nodes, so they start advertising to host others’ viral content on the node with the larger link.

My friend, let me tell you a story from my studies, when I had to help someone find a bug in their 1383-line main() in C… on the other hand, I think I’ll spare you the gruesome details, but it took me 30 hours.

The Test part of TDD isn’t meant to encompass your whole need before developing the application. It’s function-by-function based. It also forces you to not have giant functions. Let’s say you’re making a compiler. First you need to parse text. Idk what language structure we are doing yet, but first we need to tokenize our stream. You write a test that inputs `hello world` into your tokenizer, then expects two tokens back. You start implementing your tokenizer. Repeat for the parser. Then you realize you need to tokenize numbers too. So you go back and make a token test for numbers.

So you don’t need to make all the tests ahead of time. You just expand at the smallest test possible.
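Here is a rough sketch of what that loop could look like in Python. Everything in it (the `tokenize` function, the `WORD` and `NUMBER` token kinds, the test names) is made up for illustration and is not from any real compiler. The first test is the `hello world` one; the number test is the one you would add later, when you notice numbers need tokens too.

```python
import re

# Hypothetical token grammar for illustration only.
# Each named group is one token kind; whitespace is simply skipped.
TOKEN_RE = re.compile(r"(?P<NUMBER>\d+)|(?P<WORD>[A-Za-z_]+)")


def tokenize(text):
    """Return a list of (kind, value) pairs found in text."""
    return [(m.lastgroup, m.group()) for m in TOKEN_RE.finditer(text)]


# Test 1: written first, before the tokenizer existed.
def test_two_words():
    assert tokenize("hello world") == [("WORD", "hello"), ("WORD", "world")]


# Test 2: added later, once it turned out numbers need tokenizing too.
def test_number():
    assert tokenize("x 42") == [("WORD", "x"), ("NUMBER", "42")]


test_two_words()
test_number()
```

The point is the order, not the regex: each test is tiny, and the `NUMBER` branch only shows up in the code after a test demanded it.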

On regular desktop environments I really like Guake - it’s a drop-down terminal emulator similar to how old games used to do it. It’s nice for quick use here and there. Though these days I just run a tiling WM with xfce4-terminal. It gets the job done and still looks good.

3·2 years ago

I see your edit, but in case you’re interested - a capacitor is technically a zero-resistance battery for DC.

46·2 years ago

What was that about him doing Twitter’s technology policing and leaving running the company to the new CEO?

3·2 years ago

Your own article says it’s VMs. The TPM itself can be bricked. OK, that sucks. Still not persistent like you describe.

1·2 years ago

I haven’t worked directly on gov cloud, but I’m familiar with its design. The two systems are completely isolated from each other, with the internet in between. I know you can port forward in AWS, so a solution would be to spin up a VPN server in AWS and connect to it from gov cloud.

2·2 years ago

No, they don’t. Worst case, known attacks have resulted in insecure keys being generated. And even if malware could somehow be transferred out of it, you wouldn’t have to trash your whole computer - just unplug the TPM.

2·2 years ago

I’m unable to look at the exact config screen, but I remember you could configure the stick to map to absolute mouse position in the Deck’s configuration.

4·2 years ago

TPM modules are pretty good. And you can buy them separately like another card. Motherboards usually have a slot for them. They are tiny like USB drives. They essentially are USB drives but for your passwords and keys. You can even configure Firefox to store your passwords in the TPM.

Yeah I had a similar feel.

Is this a homework assignment?

Just in case you might find it interesting: https://en.wikipedia.org/wiki/DOT_(graph_description_language)

1·2 years ago

That’s actually a decently good analogy, though a random redditor is still smarter than ChatGPT because they can actually analyze Google results, not just match situations and put them together.

It’s an interesting perspective, except… that’s not how AI works (even if it’s advertised that way). Even the latest approach for ChatGPT is not perfect memory. It’s a glorified search functionality. When you type a prompt, the system can choose to search your older chats for related information and pull it into context… what makes that information related is the big question here - it uses an embedding model to index and compare your chats. You can imagine it as a fuzzy paragraph search - not exact paragraphs, but paragraphs that roughly talk about the same topic…

It’s not guaranteed that if you mention not liking sushi in one chat, talking about your restaurant of choice will pull in the sushi chat. And even if it does pull that in, the model may choose to ignore it. And even if it doesn’t ignore it - you can choose to ignore it. Of course the article talks about healing, so I imagine instead of sushi we’re talking about some trauma… OK, so you can choose not to reveal details of your trauma to AI (that’s an overall good idea right now anyway). Or you can choose to delete the chat - it won’t index deleted chats.
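If it helps to see the fuzzy search idea concretely, here is a toy sketch in Python. Big assumption: a plain word-count vector stands in for the embedding model, which is far cruder than what a real system would use, and the names `embed`, `cosine`, `search` and the 0.2 threshold are all invented for illustration. It only shows the shape of the mechanism: turn chats into vectors, compare them to the prompt, pull in whatever scores above a cutoff.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(prompt, chats, threshold=0.2):
    """Return the old chats that score at or above the threshold, best first."""
    query = embed(prompt)
    scored = [(cosine(query, embed(chat)), chat) for chat in chats]
    return [chat for score, chat in sorted(scored, reverse=True) if score >= threshold]


# Hypothetical chat history.
old_chats = [
    "i really do not like sushi",
    "help me fix a bug in my tokenizer",
]

related = search("where can i get sushi tonight", old_chats)
unrelated = search("pick a restaurant for dinner", old_chats)
```

With this toy scoring, the sushi prompt pulls in the sushi chat, while the restaurant prompt pulls in nothing at all, because nothing scores above the cutoff. A real embedding model is better at catching related topics, but the retrieval is still a similarity cutoff, not a guarantee.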

At the same time, there are just about as many benefits to the model remembering something you didn’t. You can imagine a scenario where you mentioned your friend being mean to you, and later they are manipulating you again. Maybe having the model remind you of the last bad encounter is good here? Just remember - AI is a machine, and you control both its inputs and what you do with its outputs.