The research from Purdue University, first spotted by news outlet Futurism, was presented earlier this month at the Computer-Human Interaction Conference in Hawaii and looked at 517 programming questions on Stack Overflow that were then fed to ChatGPT.

“Our analysis shows that 52% of ChatGPT answers contain incorrect information and 77% are verbose,” the new study explained. “Nonetheless, our user study participants still preferred ChatGPT answers 35% of the time due to their comprehensiveness and well-articulated language style.”

Disturbingly, programmers in the study didn’t always catch the mistakes being produced by the AI chatbot.

“However, they also overlooked the misinformation in the ChatGPT answers 39% of the time,” according to the study. “This implies the need to counter misinformation in ChatGPT answers to programming questions and raise awareness of the risks associated with seemingly correct answers.”

You have no idea how many times I mentioned this observation from my own experience and people attacked me like I called their baby ugly.

ChatGPT in its current form is a good help, but nowhere near ready to actually replace anyone.

A lot of firms are trying to outsource their dev work overseas to communities of non-English speakers, and then handing the result off to a tiny support team.

ChatGPT lets cheap, low-skill workers churn out miles of spaghetti code in short order, creating the illusion of efficiency for people who don’t know (or care) what they’re buying.

“Major new Technology still in Infancy Needs Improvements”

– headline every fucking day

“Will this technology save us from ourselves, or are we just jerking off?”

GPT-2 came out a little more than 5 years ago, it answered 0% of questions accurately and couldn’t string a sentence together.

GPT-3 came out a little less than 4 years ago and was kind of a neat party trick, but I’m pretty sure it answered ~0% of programming questions correctly.

GPT-4 came out a little less than 2 years ago and can answer 48% of programming questions accurately.

I’m not talking about morality, or creativity, or good/bad for humanity, but if you don’t see a trajectory here, I don’t know what to tell you.

Speaking at a Bloomberg event on the sidelines of the World Economic Forum’s annual meeting in Davos, Altman said the silver lining is that more climate-friendly sources of energy, particularly nuclear fusion or cheaper solar power and storage, are the way forward for AI.

“There’s no way to get there without a breakthrough,” he said. “It motivates us to go invest more in fusion.”

It’s a good trajectory, but when you have people running these companies saying that we need “energy breakthroughs” to power something that gives more accurate answers in the face of a world that’s already experiencing serious issues arising from climate change…

It just seems foolhardy if we have to burn the planet down to get to 80% accuracy.

I’m glad Altman is at least promoting nuclear, but at the same time, he has his fingers deep in a nuclear energy company, so it’s not like this isn’t something he might be pushing because it benefits him directly. He’s not promoting nuclear because he cares about humanity, he’s promoting nuclear because he has deep investments in nuclear energy. That seems like just one more capitalist trying to corner the market for themselves.

Seeing the trajectory is not the ultimate answer to anything.

Perhaps there is some line between assuming infinite growth and declaring that this technology that is not quite good enough right now will therefore never be good enough?

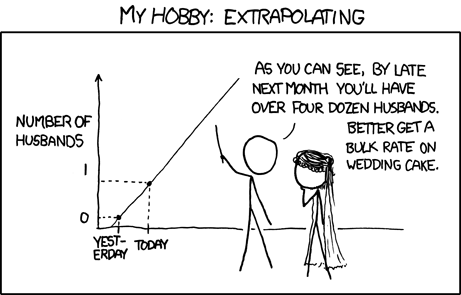

Blindly assuming no further technological advancements seems equally as foolish to me as assuming perpetual exponential growth. Ironically, our ability to extrapolate from limited information is a huge part of human intelligence that AI hasn’t solved yet.

will therefore never be good enough?

no one said that. but someone did try to reject the fact it is demonstrably bad right now, because “there is a trajectory”.

People downvote me when I point this out in response to “AI will take our jobs” doomerism.

Even if AI is able to answer all questions 100% accurately, it wouldn’t mean much either way. Most of programming is making adjustments to old code while ensuring nothing breaks. Gonna be a while before AI will be able to do that reliably.

So the issue for me is this:

If these technologies still require large amounts of human intervention to make them usable then why are we expending so much energy on solutions that still require human intervention to make them usable?

Why not skip burning the planet to a crisp for half-formed technology that can’t give consistent results and instead just pay people a living fucking wage to do the job in the first place?

Seriously, one of the biggest jokes in computer science is that debugging other people’s code gives you worse headaches than migraines.

So now we’re supposed to dump insane amounts of money and energy (as in burning fossil fuels and needing so much energy they’re pushing for a nuclear resurgence) into a tool that results in… having to debug other people’s code?

They’ve literally turned all of programming into the worst aspect of programming for barely any fucking improvement over just letting humans do it.

Why do we think it’s important to burn the planet to a crisp in pursuit of this when humans can already fucking make art and code? Especially when we still need humans to fix the fucking AI’s work to make it functionally usable. That’s still a lot of fucking work expected of humans for a “tool” that’s demanding more energy sources than currently exist.

They don’t require as much human intervention to make the results usable as would be required if the tool didn’t exist at all.

Compilers produce machine code, but require human intervention to write the programs that they compile to machine code. Are compilers useless wastes of energy?

Compilers are deterministic and you can reason about how they came to their results, and because of that they are useful.

There is a good chance that it is instrumental in discoveries that lead to efficient clean energy. It’s not as if we were at some super clean, unabused planet before language models came along. We have needed help for quite some time. Almost nobody wants to change their own habits (meat, cars, planes, constant AC and heat…), so we need something. Maybe AI will help in this endeavor like it has at so many other things.

There is a good chance that it is instrumental in discoveries that lead to efficient clean energy

There is exactly zero chance… LLMs don’t discover anything, they just remix already existing information. That is how it works.

This is a common misunderstanding of what it means to discover new things. New things are just remixing old things. For example, AI has discovered new matrix multiplications, protein foldings, drugs, chess/go/poker strategies, and much more that are all far superior to anything humans have ever come up with in these fields. In all these cases, the AI was just combining old things in new ways. Even Einstein was just combining old things in new ways. There is exactly zero chance that AI will all of a sudden quit making new discoveries.

It’s also “discovered” multitudes more that are complete nonsense.

Just a slight correction. ML/AI has aided in all sorts of discoveries; GenAI is a “remixing of existing concepts”. I don’t believe I’ve read, nor do the underlying principles really enable, anything regarding GenAI and discovering new ways to do things.

For example, AI has discovered

no, people have discovered. llms were just a tool used to manipulate large sets of data (instructed and trained by people for the specific task) which is something in which computers are obviously better than people. but same as we don’t say “keyboard made a discovery”, the llm didn’t make a discovery either.

that is just intentionally misleading, as is calling the technology “artificial intelligence”, because there is absolutely no intelligence whatsoever.

and comparing that to einstein is just laughable. einstein understood the broad context and principles and applied them creatively. llm doesn’t understand anything. it is more like a toddler watching its father shave and then moving a lego piece across its face pretending to shave as well, without really understanding what shaving is.

I didn’t say LLMs made these discoveries. They didn’t. AI made those discoveries. Yes, it is true that humans made AI, so in a way, humans made the discoveries, but if that is your take, then it is impossible for AI to ever make any discovery. Really, if we take this way of thinking to its natural conclusion, then even humans can never make discoveries, only the universe can make discoveries, since humans are a result of the universe “universing”. It is arbitrary to try to credit humans with anything that happens further down their evolution.

Humans tried for a long time to get good at chess, and AI came along and made the absolute best chess players utterly irrelevant, even if we give a team of the world’s best chess players an endless clock and the AI a single minute for the entire game. That was 20 years ago. This is happening in more and more fields and showing no sign of stopping. We don’t know yet if discoveries will come from future LLMs like they have from other forms of AI, but we do know that with each generation more and more complex patterns are being identified and utilized by LLMs. Three years ago the best LLMs would have scored single digits on an IQ test; now they score in the triple digits. It is laughable to think that anyone knows where the current rapid trajectory will stop for this new technology, and much more laughable to think we are already at the end.

AI made those discoveries. Yes, it is true that humans made AI, so in a way, humans made the discoveries, but if that is your take, then it is impossible for AI to ever make any discovery.

if this is your take, then a lot of keyboards made a lot of discoveries.

AI could make a discovery if there was one (ai). there is none at the moment, and there won’t be any for any foreseeable future.

a tool that can generate statistically probable text without really understanding the meaning of the words is not an intelligence in any sense of the word.

your other examples, like playing chess, are just applying computers to brute-force through a specific mundane task, which is obviously something computers are good at and have been used for since we have had them, but again, that does not constitute thinking, or intelligence, in any way.

it is laughable to think that anyone knows where the current rapid trajectory will stop for this new technology, and much more laughable to think we are already at the end.

it is also laughable to assume it will just continue indefinitely, because “there is a trajectory”. a lot of technologies have some kind of limit.

and just to clarify, i am not some anti-computer, get-back-to-the-trees type. i am eager to see what machine learning models will bring in the field of evidence based medicine, for example, which is something where humans notoriously suck. but i will still not call it “intelligence” or “thinking”, or “making a discovery”. i will call it synthesizing more data than would be humanly possible and finding a pattern in it, and i will consider it a cool result, no matter what we call it.