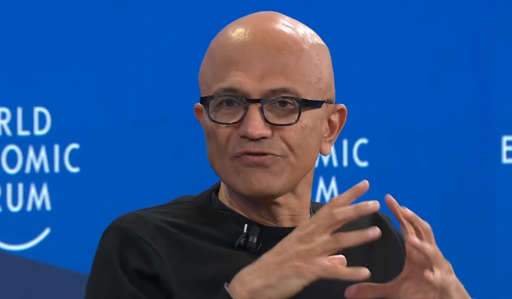

Workers should learn AI skills and companies should use it because it’s a “cognitive amplifier,” claims Satya Nadella.

In other words: please help us, use our AI.

“Cognitive amplifier?” Bullshit. It demonstrably makes people who use it stupider and more prone to believing falsehoods.

I’m watching people in my industry (software development) who’ve bought into this crap forget how to code in real-time while they’re producing the shittiest garbage I’ve laid eyes on as a developer. And students who are using it in school aren’t learning, because ChatGPT is doing all their work - badly - for them. The smart ones are avoiding it like the blight on humanity that it is.

As evidence: How the fuck is a company as big as Microsoft letting their CEO keep making such embarrassing public statements? How the fuck has he not been forced into more public speaking training by the board?

This is like the 4th “gaffe” of his since the start of the year!

You don’t usually need “social permission” to do something good. Mentioning that is, at best, publicly stating that you think you know what’s best for society (and they don’t). I think the more direct interpretation is that you’re openly admitting you’re doing the type of thing that you should have asked permission for, but didn’t.

This is past the point of open desperation.

Love your name.

Wild guess here: the “social” one is the one where most countries have allowed them to do whatever it takes, plus special contract deals.

Likely not public socially. At least, I doubt that.

Last time they were crying that nobody wanted it and made the word bad. It’s all kind of a strategy to convince as many people as you can. Like other users mentioned above about people in their org using GPT, I see this in my org too, from a variety of engineers and regular folks, and I facepalm every time because you get responses that roughly make sense but are contextually horrendously poor and entirely misunderstood.

Desperation, probably, because they invested so much money in something whose demand doesn’t even exist yet.

And they are all getting dependent on and addicted to something that is currently almost “free”, but the monetization of it all will soon come in force. Good luck having the money to keep paying for it, or the capacity to handle all the advertising it will soon start to push out. I guess the main strategy is to manipulate people into getting experience with it, with these 2 or 3 years basically being equivalent to a free trial, and to ensure people will demand access to the tools from their employers, who will pay out of pocket. When barely anyone is able to get their employers to pay for things like IDEs… Oh well.

We watched this exact same tactic happen with Xbox gamepass over the last 5 years. They introduced it and left in the capability to purchase the “upgrade” for $1/year. Now they are suddenly cranking it up to $30/month and people are still paying it because they feel like it’s a service they “have to have”.

Which is why I’ve dropped it myself. Not worth the price.

I’m usually too lazy when it comes to canceling things, but I canceled Game Pass right away. $30 per month is just offensive.

Agreed

Is it $30/month on top of Xbox gold or does the game pass include it?

It’s included, but good lord if that’s not a very high price for temporary access to a collection of bargain bin games. You could buy a full price game every other month for that money.

Or 3-6 indies on sale. Hell, if you save up that money you can go nuts every Steam/GOG sale: $360 should get you around $1k worth of games at retail price, or more, if you are a patient gamer.

Edit: and you can KEEP that, not temporary

On top of that, I have personally developed some gaming habits that I don’t care for at all as a direct result of gamepass.

Subscriptions are thieves of intentionality

What type of habits?

The theoretically vast availability has made me quick to abandon games that didn’t deserve it. I’m having a lot of difficulty committing to even some objectively good games. I don’t enjoy the bouncing around and yet I keep doing it. It feels related to FOMO.

Gold doesn’t exist anymore. Now it’s Game Pass Core or something… the rate went up with that forced “migration”. You do get access to a few “free” games with Core, but you gotta pay way more to have the full deal. I think Core (which is the cheapest, baseline option) is $70/yr now? (Edit: I just checked my statement; it’s $78.50.)

This recent massive price hike (it fucking doubled) is what got me to cancel my Live subscription completely.

I’ve been subscribed since 2002, when it first released. So their greed lost a sure stream of income. I’m not alone.

Small sample, but everyone I know dropped it at the increase to 30 bucks. One of them had been primarily playing PlayStation and Xbox for the last decade but got a Steam Deck and primarily plays that now.

Textbook example of enshittification.

Renting is always going to end up the same way.

I get that users think they get much value for low money, but it’s always bait and switch.

Sure (statistically) nobody cares, though.

Hell, Microsoft and Apple did the same thing decades ago. Microsoft offered computer discounts to high schools and colleges, so that the students would be used to (and demand) Microsoft when they went into the business world. Apple then undercut that by offering very discounted products to elementary and junior high schools, so that the students would want Apple products in higher education and the business world.

The tactic let them write off all the discounts on their taxes, but lock in customers and raise prices on business (and eventually consumer) goods.

> I’m watching people in my industry (software development) who’ve bought into this crap forget how to code in real-time while they’re producing the shittiest garbage I’ve laid eyes on as a developer.

I just spent two days fixing multiple bugs introduced by some AI-made changes. The person who submitted them, a senior developer, had no idea what the code was doing; he just prompted some words into Claude and submitted it without checking if it even worked. Then it was “reviewed” and blindly approved by another coworker whose reasoning was, in his words, “if the AI made it, then it should be alright”.

> “if the AI made it, then it should be alright”

Show him the errors of his ways. People learn best by experience.

You’re right, they SHOULD be fired.

Management loves that they are using AI, they will probably get promoted if anything.

It’s being pushed very hard by management where I work and I’m consistently seeing the same as above. I mentioned on another thread recently that I’ve heard “I don’t know why Claude did that” multiple times over the past few weeks.

It’s infuriating.

The number of times I’ve been debugging something and a coworker messages “I asked ChatGPT and it said [obviously wrong thing]” makes me want to gouge my eyes out.

Look for another workplace as soon as you can. If not, you will forever be in the position of being the cleaner of their shit, and you will not gain anything by it. They will get their metrics going up, and you will be known as the slow guy because you care about fixing the code.

A scapegoat to shove the little responsibility they get paid so much for onto (golden parachute).

> And students who are using it in school aren’t learning, because ChatGPT is doing all their work - badly - for them.

This is the one that really concerns me. It feels like generations of students are just going to learn to push the slop button for any and everything they have to do. Even if these bots were everything techbros claimed they are, this would still be devastating for society.

Well, one way or another it won’t be too many generations. Either we figure out it’s a bad idea, or sooner or later things will go off the rails enough that we won’t maintain the infrastructure to support everyone using this type of “AI”. Being kind of right 90% of the time is not good enough at a power plant.

Businesses have invested too much time, money and promises in AI to admit they made a mistake, now. And like all business models based on the Sunk Cost Fallacy, it’s going to do a lot of damage along the way before it finally dies.

Even one or two seems like it’d be catastrophic. And if nothing’s changed until they enter the workforce and start fucking shit up, I’d say that’s something like 10 years of teens becoming dependent on it and losing out on critical education and development (presuming worst case - no market crash). That’s a lot of damage.

I’ve been programming professionally for 25 years. Lately we’re all getting these messages from management that don’t give requirements but instead give us a heap of AI-generated code and say “just put this in.” We can see where this is going: management are convincing themselves that our jobs can be reduced to copy-pasting code generated by a machine, and the next step will be to eliminate programmers and just have these clueless managers. I think AI is robbing management of skills as well as developers. They can no longer express what they want (not that they were ever great at it): we now have to reverse-engineer the requirements from their crappy AI code.

> but instead give us a heap of AI-generated code and say “just put this in.”

> we now have to reverse-engineer the requirements from their crappy AI code.

It may be time for some malicious compliance.

Don’t reverse engineer anything. Do as you’re told and “just put this in” and deploy it. Everything will break and management will explode, but now you’ve demonstrated that they can’t just replace you with AI.

Now explain what you’ve been doing (reverse engineering to figure out their requirements), but that you’re not going to do that anymore. They need to either give you proper requirements so that you can write properly working code, or they give you AI slop and you’re just going to “put it in” without a second thought.

You’ll need your whole team on board for this to work, but what are they going to do, fire the whole team and replace them with AI? You’ll have already demonstrated that that’s not an option.

So in your case, not only is the LLM coding assistant not making you faster, it’s actively impeding your productivity and the productivity of your stakeholders. That sucks, and I’m sorry you’re having to put up with it.

I’m lucky that in my day job, we’re not (yet) forced to use LLMs, and the “AI coding platform” our upper management is trying to bring on as an option is turning out to be an embarrassing boondoggle that can’t even pass cybersecurity review. My hope is that the VP who signed off on it ends up shit-canned because it’s such a piece of garbage.

> I’m watching people in my industry (software development) who’ve bought into this crap forget how to code in real-time while they’re producing the shittiest garbage I’ve laid eyes on as a developer.

Yes. Then I come on Lemmy and see a dedicated pack of heralds concurrently professing that they do the work of 10 devs while eating bonbons, and that everyone who isn’t using it is stupid. So annoying.

God, that’s so frustrating. I want to shake them and shout, “No, your code is 100% ass now, but you don’t know it because it passes tests that were written by the same LLM that wrote your code! And you have barely laid eyes on it, so you’re forgetting what good code even looks like!”

> “Cognitive amplifier?” Bullshit. It demonstrably makes people who use it stupider and more prone to believing falsehoods.

Demonstrably proven, too.

> EEG revealed significant differences in brain connectivity: Brain-only participants exhibited the strongest, most distributed networks; Search Engine users showed moderate engagement; and LLM users displayed the weakest connectivity. Cognitive activity scaled down in relation to external tool use.

https://www.media.mit.edu/publications/your-brain-on-chatgpt/

I study mechatronics in Germany and I don’t avoid it. I have yet to meet a single person who is avoiding it. I have made tremendous progress learning with it, but that is mostly because my professors refuse to give solutions for the seminars. Learning is probably the only real advantage I have seen yet, if you don’t use it for cheating or shortcuts, which is of course a huge problem. But getting answers to problems, being able to ask specific follow-up questions, and most of all researching and getting to the right information faster (through external links from the AI) has made studying much more efficient and enjoyable for me.

I don’t like the impact AI is having on society, but personally it has really helped me so far (setting aside the looming bubble crisis and the effect it is having on the memory market, for example).

Yeah, it is a tool, and it has to be used correctly. It also offers a trade-off when you research some topics: you gain time, but slowly lose the ability to conduct the research yourself. If I don’t have time constraints I avoid AI, so I can maintain my skill of searching, categorizing, and piecing together information, which is a key skill in a fast-moving industry (SW dev).

Also, for learning I usually use it only for follow-up questions; without a base understanding, it can hallucinate whatever and spoon-feed it to my brain. Nothing can compete with an AI that is designed to burp out the most plausible-sounding phrases ever. Unfortunately, its correctness is not on par with that.

I often help my younger sister; she wants to learn programming, and I noticed she uses an extensive amount of AI. She can solve issues with the help of an AI but cannot solve them alone. At least it’s not vibe coding; she uses it for sub-tasks. But I fear it hinders her learning.

I decided not to finish my college program partially because of AI like ChatGPT. My last 2 semesters would have been during the pandemic, with an 8-month work term before them. COVID ended up canceling the work term, though they would give me the credit anyway. The rest of the classes would all be online and mostly multiple-choice quizzes. There wasn’t a lot of AI-scanning tech for academic submissions yet either. I felt that if I continued, I’d be getting a worse product for the same price (online vs. in class/lab), I wouldn’t get that valuable work experience, and I’d be at a disadvantage if I didn’t use AI in my work.

Luckily my program had a 2-year or 3-year option. The first 2 years of the 3-year program are the same, so I just took the 2-year cert and got out.

Wym you would be at a disadvantage? College isn’t a competition. By not using AI in the learning process and submissions you might get a lower grade than others, but trust me no one fucking checks your college grades. They check if you know what you are doing.

In fact you wouldn’t get a lower grade, others would have an inflated grade which then won’t translate to skills and will have issues in the workforce.

It didn’t sit right with me. I made the dean’s list each semester before that for good grades. I wanted no speculation that my grades were influenced by AI in the next semester. In a competitive job market, making the dean’s list consistently could absolutely stand out among other candidates. It shows respect for deadlines and the education.

This has to be a bot - no student in the history of the universe has ever said this, ever

Not a bot. Both my internship interviews commented on me making the dean’s list.

Was AI really that big of a thing at the time of Covid?

It was just starting out around that time, hence why it wasn’t much of a concern in my earlier semesters. Plus they had better in-class controls for cheating, like monitoring computers and in-person exams instead of online ones. You would have gotten an instant fail if you got caught using AI or plagiarism on your projects.

So…he has something USELESS and he wants everybody to FIND a use for it before HE goes broke?

I’ll get right on it.

Nice paraphrasing!

It’s insane how he says “we” not as in “we at Microsoft” but as in “Me, I and myself, as the sole representative of the world economy, say: Find use cases for my utterly destructive slop machine… or else!”

Tech CEOs have all gone mad with protagonist syndrome.

Well, he is the “money man”. He doesn’t DO any of the work himself, he “buys” workers.

He has NO skill, NO knowledge, NO training, NO license. Just money. All you need is money.

I was expecting something much worse, but to me it feels like he’s saying “we, the people working on this stuff, need to find real use cases that actually justify the expense”, which is… pretty reasonable.

Not defending him or Microsoft at all here but it sounds like normal business shit, not a CEO begging users to like their product

I mean, it would be a lot more reasonable if the entire tech industry hadn’t gone absolutely 100% all-in on investing billions and billions of dollars into the technology before realizing that they didn’t have any use cases to justify that investment.

Oh you don’t have to convince me it was a mistake, his comment just wasn’t what it’s being made out to be. “Find a use for it or we’re fucked” is a lot different than “please use our product or we’re fucked”

Idk, I don’t really see much of a distinction.

I dunno, there’s a pretty big distinction. “Please use our shitty thing” vs “Please make our shitty thing better so people want to use it”

It’s placing “blame” on the industry leaders for failing to make something useful

But HE is the industry leader.

It’s not like it wasn’t him that sunk every successful product they still had in the last couple of years.

“Social permission” is one term for it.

Most people don’t realize this is happening until it hits their electric bills. Microslop isn’t permitted to steal from us. They’re just literal thieves and it takes time for the law to catch up.

> [Microsoft are] just literal thieves.

Always have been.

(But now it’s worse because it’s the entire public, not just their competitors)

They aren’t Microsoft anymore, they’re full on Microslop.

One might say they‘re Macroslop.

You will enjoy your chatbot that confidently tells lies while your electricity bill goes up by 50% and the nearby datacentres try to make the next model not use em-dashes.

As a long-time user of the em-dash I’m pissed off that my usual writing style now makes people think I used AI. I have to second-guess my own punctuation and paraphrase.

We suffer together! At least my semicolon is still safe.

I don’t use LLMs for anything creative for the express purpose of avoiding generic tone, haha.

Yeah. I just wouldn’t feel comfortable putting my name to a slice of that dreary blandness.

How can you lose social permission that you never had in the first place?

The peasants might light their torches

Datacenters are expensive and soft targets.

Dude, buildings are pretty hard.

not OP but I believe they’re “soft” in the sense that they don’t have moats/high electric fences/battalions of armed guards around 24/7

With a clipboard you could probably just walk in and start unplugging things

That’s… not quite true. Usually they take access quite seriously. If it’s a multi-tenant space, every space will be separated and the physical cages around the machines locked and monitored.

All the same, they are designed to keep small numbers of mostly law-abiding people out, not an angry mob with torches.

Challenge accepted

Agree. I live in Winnipeg, Canada, and I have visited local datacentres - anything built in the last twenty years would be very hard to physically penetrate with stealth alone.

There are older ones which might be a bit less sophisticated, but that’s not the norm.

Yeah but it’s really easy to hurt their feelings so be mindful

This guy knows how to translate billionaire dipshit speak.

“Torching” the gas turbines at AI companies’ datacenters would be highly effective, especially since they are outside and only a fence protects them.

It is so dumb that they gas our environment for “AI”. It was evil doing it in WW1 and WW2, and it still is today. See:

- https://www.theguardian.com/technology/2026/jan/15/elon-musk-xai-datacenter-memphis

- https://capitalbnews.org/musk-xai-memphis-black-neighborhood-pollution/

It is insane.

There’s a latency between asking for forgiveness and being demanded to stop.

It’s easier to beg for social forgiveness than it is to ask for social permission

The whole point of “AI” is to take humans OUT of the equation, so the rich don’t have to employ us and pay us. Why would we want to be a part of THAT?

AI data centers are also sucking up all the high-quality DDR5 RAM on the market, making everything that relies on that RAM ridiculously expensive. I can’t wait for this fad to be over.

Not to mention the water depletion and electricity costs that the people who live near AI data centers have to deal with, because tech companies can’t be expected to be responsible for their own usage.

I mean, do you really think it’s a better idea to let them build their own separate water and power systems?

They should be forced to upgrade the existing infrastructure so everyone benefits.

They should be forced to upgrade existing infrastructure and pay for it. They are refusing to pay for it, and the electric companies are passing the costs onto the residents near these data centers, which is grossly unfair.

They should be forced to build closed loop systems and green energy sources.

I’d love to take humans out of the equation of all work possible. The problem is how the fascist rulers will treat the now unemployed population.

Yep. Ideal future is robots do all the work for us while we enjoy life.

But realistic future is rich people enjoy life while normal people starve.

Don’t forget the few “lucky” people doing the most disagreeable of jobs because they’re still hard to automate and the starving population provides a cheap desperate pool of labour to do even the most dangerous and unhealthy jobs for a pittance.

The rich seem to be the common evil denominator. It is time to act against them. Worldwide.

I have to agree, even if I have no issue with GenAI itself. No one needs as many datacenters as they are planning. Adoption will crash as soon as they try monetizing it for real. Even if they try using cloud gaming as a load in those centers, not one person I know would trade their local PC for something that depends on a fast internet connection without data caps and introduces a permanent 100ms+ delay on all games.

I swapped my 3070 Ti 8GB for a 5070 16GB. If I sell off the 3070 Ti, the upgrade cost me €300 (but I tend to keep it as a backup), and I can run my local GenAI and LLMs without issues now. I don’t need datacenters; I need CDNs so I can get the content I run locally. And TBH, if they really try to kill local compute in gaming: I have enough games here to last me a decade or more without getting bored, and I can play all of them while sitting in a mountain cabin.

To point out: they already tried this for gamers specifically with GeForce Now, Stadia, etc., and it’s not exactly a cash cow. Not sure why they think a whole PC is preferable.

I mean, this is literally an argument against using oxen to plough fields instead of doing it by hand.

The answer is always that society should reorient around not needing constant labour and wealth being redistributed.

- Denial

- Anger

- Bargaining <- They’re here

- Depression

- Acceptance

The five stages of corporate grief:

- lies

- venture capital

- marketing

- circular monetization

- private equity sale

Where do the three envelopes fit in?

Roll 2d6 on private equity sale. 7 or higher and you get to ride again with an IPO at position 6. 2-6 and you get to fill out the envelopes.

In my pocket

Denial: “AI will be huge and change everything!”

Anger: “noooo stop calling it slop its gonna be great!”

Bargaining: “please use AI, we spent so much money on it!”

Depression: companies losing money and dying (hopefully)

Acceptance: everyone gives up on it (hopefully)

Acceptance: It will be reduced to what it does well and priced high enough that it doesn’t compete with equivalent human output. Tons of useless hardware will flood the market; China will buy it back and make cheap video cards from the used memory.

Which seems like good progress. I feel like they were in denial not three weeks ago.

May the depression be long lasting and heartfelt in the United States of AI.

Correct, but needs clarification:

Depression referring to the whole economy as the bubble burst.

Acceptance is when the government agrees to bail them out because they’re too big and the government is too dependent on them to let them die.

“Microsoft thinks it has social permission to burn the planet for profit” is all I’m hearing.

Well, they at least have investor permission… and investors are the only people they care about anyway.

Probably in the Hobbesian sense that people aren’t actively revolting.

“social permission”?

Society didn’t even permit you and others to spread AI onto everyone to begin with.

I don’t think there’s a single data center anywhere that a significant number of locals are even ambivalent about, let alone support…

In pretty much every place, they’re getting massive tax breaks that citizens pay for, and cheaper energy prices because citizens pay the higher cost caused by the data center’s increased demand.

We need to seize all this shit from corps.

Stop fucking around. Once Trump is handled, we need to nationalize a whole lot of shit that’s been privatized over the last 50 years.

I’ve also read reports that the noise levels and pollution coming out of these things are staggering. Not to mention they appear to be built as quickly as possible with little regard for laws and regulations.

Fucks the water up too.

People that live too close turn their taps open and can get barely a trickle, sometimes literally nothing.

That’s because the data centers are sucking up potable water for cooling, since it’s cheaper to run it wide open no matter what than to pay for a sustainable cooling system up front.

That’s mainly Grok, since Elon never even bothered getting approval for the shitton of gas turbines he placed, which wreak havoc on the air quality in Memphis. I think xAI is the only datacenter running on 100% fossil fuels without permission from any municipality…

Interesting! I was under the impression all data centers were causing similar problems, but leave it to the X Man to go the extra mile to really fuck things up. That piece of shit has unlimited money and still pulls shit like this.

Just to add to what you said, it appears Musk is in violation of EPA standards and they may be doing something about it: https://www.techspot.com/news/110971-epa-shuts-down-xai-off-grid-turbine-loophole.html

Data centers should be demolished by act of a real leader if we ever get one. They got this data by corrupt means, perverting our laws and regulators. They deserve to lose their entire investments; that information is a threat to society, and we should not allow tech lords to control it.

> demolish

Fuck that, repurpose them. Even if you just break down the tech components and use the buildings for something unrelated.

> They deserve to lose their entire investments

Yes, nationalization involves the seizing of resources…

I’m just saying there’s no reason to destroy anything

High Performance Compute for medical research to go with the free healthcare we should also have in this scenario.

That information is a danger in the hands of government. Burn the motherfuckers down. There is no legitimate need for that much computing power, all devoted to enslaving us.

You can’t even exfiltrate data reliably from an LLM; storage can be deleted, and RAM is volatile anyway. No need for destruction at all; the compute itself is useful. There are so many science projects that currently don’t have the funding to get access to exactly the hardware that’s in use there.

techbros don’t understand consent

As opposed to legal permission, which, hahahahaha

In English: “they’re talking about guillotines a lot”

Translation: Microslop’s executives are finally starting to realize that they fucked up.

Let’s just say AI truly is a world-changing thing.

Has there ever been another world-changing thing where the sellers of that thing had to beg people to use it?

The applications of radio were immediately obvious, everybody wanted access to radios. Smart phones and iPods were just so obviously good that people bought them as soon as they could afford them. Nobody built hundreds of km of railroads then begged people to use them. It was hard to build the railways fast enough to keep up with demand.

Sure, there have been technologies where the benefit wasn’t immediately obvious. Lasers, for example, were a cool thing that you could do with physics for a while. But, nobody was out there banging on doors, begging people to find a use for lasers. They just sat around while people fiddled with them, until eventually a use was found for them.

Literally burning the planet with power demand from data centers but not even knowing what it could possibly be good for?

That’s eco-terrorism for lack of a better word.

Fuck you.

Social permission? I don’t remember us having a vote or something on this bullshit.

The oligarch class is again showing why we need to upset their cart.

As far as I can tell there hasn’t been any tangible reward in terms of pay increase, promotion or external recruitment from using the cognitive amplifier.

You never had it to begin with. Goddamn leeches.